Using Java Big Decimal to compute square roots of large numbers

The standard number classes in Java are adequate for most purposes, but occasionally a calculation cannot safely be carried out with the standard classes: I once worked for a bank that insisted calculations be carried out using infinite precision arithmetic. Java caters for infinite precision arithmetic- the BigInteger and BigDecimal classes give infinite precision arithmetic in return for a large performance overhead, inelegant code and the need to worry about whether an operation, such as finding the square root of a number, will terminate or run for ever. Here two iterative algorithms are compared with each other and with the the Java Math.sqrt() method.

The code samples supplied here come with no guarantee whatsoever. Use at your own risk

The Test Problem

One of the problems on Project Euler involved finding the square root of

59370809220861047609922354854410565869030692834007857210054627595408757190

This is 74 decimal digits long and the root is approximately

7705245565253650474334883595061662024.24426667

The implementation of each of the algorithms described here was tested on small numbers the exact value of which could be calculated by hand. As expected the algorithms did not give exact results, for example the square root of 196 was found to be 13.999999........ with some other digits after the ellipse. Apart from this the results were correct.

Big Integer and Big Decimal

These classes provide infinite precision arithmetic and useful methods, such as isProbablePrime() and gcd() not offered by the standard types: long, integer, double etc. Using them for simple operations is cumbersome, and comparing two BigNumbers means a lot of typing. Sometimes just constructing one seems awkward. One reason for this is that the standard operators have not been overloaded.

A key class, MathContext, restricts the number of decimal places to which a calculation is carried out and is essential when a calculation ( √2 for example) will never end. For this investigation the precision was set at one hundred decimal placed.

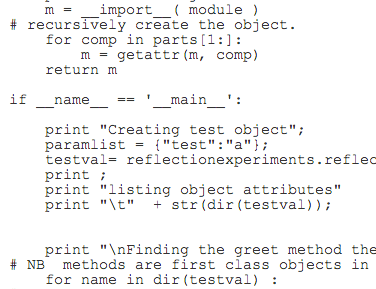

Code for Babylonian Method

public static BigDecimal bigsqrtBabylonian(BigDecimal anumber,String aPrecision)

{

int exponent = anumber.toString().length()/2;

BigDecimal guess = BigDecimal.TEN.pow(exponent);

BigDecimal precision = new BigDecimal(aPrecision);

BigDecimal nextguess = TWO;

BigDecimal theError = guess.subtract(nextguess);

while(theError.abs().compareTo(precision) >0)

{

// nextguess = 0.5*(guess + anumber/guess)

// mc is a class variable.

nextguess = anumber.divide(guess,mc);

nextguess = nextguess.add(guess);

nextguess = nextguess.multiply(HALF);

theError = guess.subtract(nextguess);

guess = nextguess;

}

return guess;

}The "Babylonian" Algorithm

Wikipedia discusses several algorithms for calculating square roots most of which are interesting but of limited use or of historical interest only. A reasonably fast algorithm that would give accurate results was needed. Wikipedia and various other places produced two possibilities, both iterative methods (Iterative methods have the advantage of being intuitive and easy to understand ): The Babylonian or Heron method, which is known to converge rapidly and a binary search method that should be logarithmic in the size of the input. Other algorithms such as Chebyshev polynomials methods were left for future investigations. Further aspects like the scale were not used in this first pass.

The Babylonian method, a case of Newton's method starts with a guess at the square root then refines it iteratively. It is a case of Newton's method.

Let anumber is the number whose root is desired, guess the current estimate of the square root and precision the desired precision. The algorithm is:

guess = initial estimate

nextguess = 1000-0*

while( | nextguess -guess | > desired_precision)

{

nextguess = 0.5*(guess + anumber/guess)

}

return nextguess

This converges rapidly. Normally only a few iterations are needed to reach a high precision ( around a dozen for a simple case, according to Wikipedia).

A binary Chop Algorithm

The alternative was a binary search

- delta = anumber/2

- guess = delta/2

- desiredprecision = 1.0E-100 ( change to taste)

- guesssquared = guess*guess

- if(guesssquared > anumber) guess = guess -delta

- if(guesssquared < anumber) guess = guess +delta

- if( abs(guesssquared - guess*guess) < desiredprecision ) return guess

- delta = delta/2

This needs a multiplication where the Babylonian method has a division and a multiplication. It is also theoretically more elegant. So the guess was these two forces would balance out. This turned out to be wrong.

Timings

Big Decimal Babylonian method precision 10**-100 0.51 milliseconds (averaged over 1000 iterations)

Big Decimal iterative method precision 10**-100 37 milliseconds (averaged over 1000 iterations)

By comparison the standard Java square root algorithm for a double took around 100 nanoseconds and a version of the Babylonian Algorithm for a double that did not use infinite precision arithmetic took around 2 microseconds.

Conclusions

Despite any weaknesses in the method of measurement it seems clear that BigDecimal calculations have an enormous overhead, compared with the limited precision of the square root calculation of the Java Math package. The only surprise is the magnitude of the overhead

It is also apparent that for high precision calculations the “Babylonian” method converges much faster, for the same precision, than the iterative methods though this advantage is reduced as the precision decreases. The same effect is seen for the fixed precision calculations of the Java Math package.

BigDecimal and Big Integer calculations should only be used when unavoidable or when speed is not critical but precision is critical.

The original goal of deciding whether the “Babylonian” or “iterative” algorithm is faster was achieved. The “Babylonian” algorithm won decisively. It seems unlikely changing implementation details will alter this.